Introduction

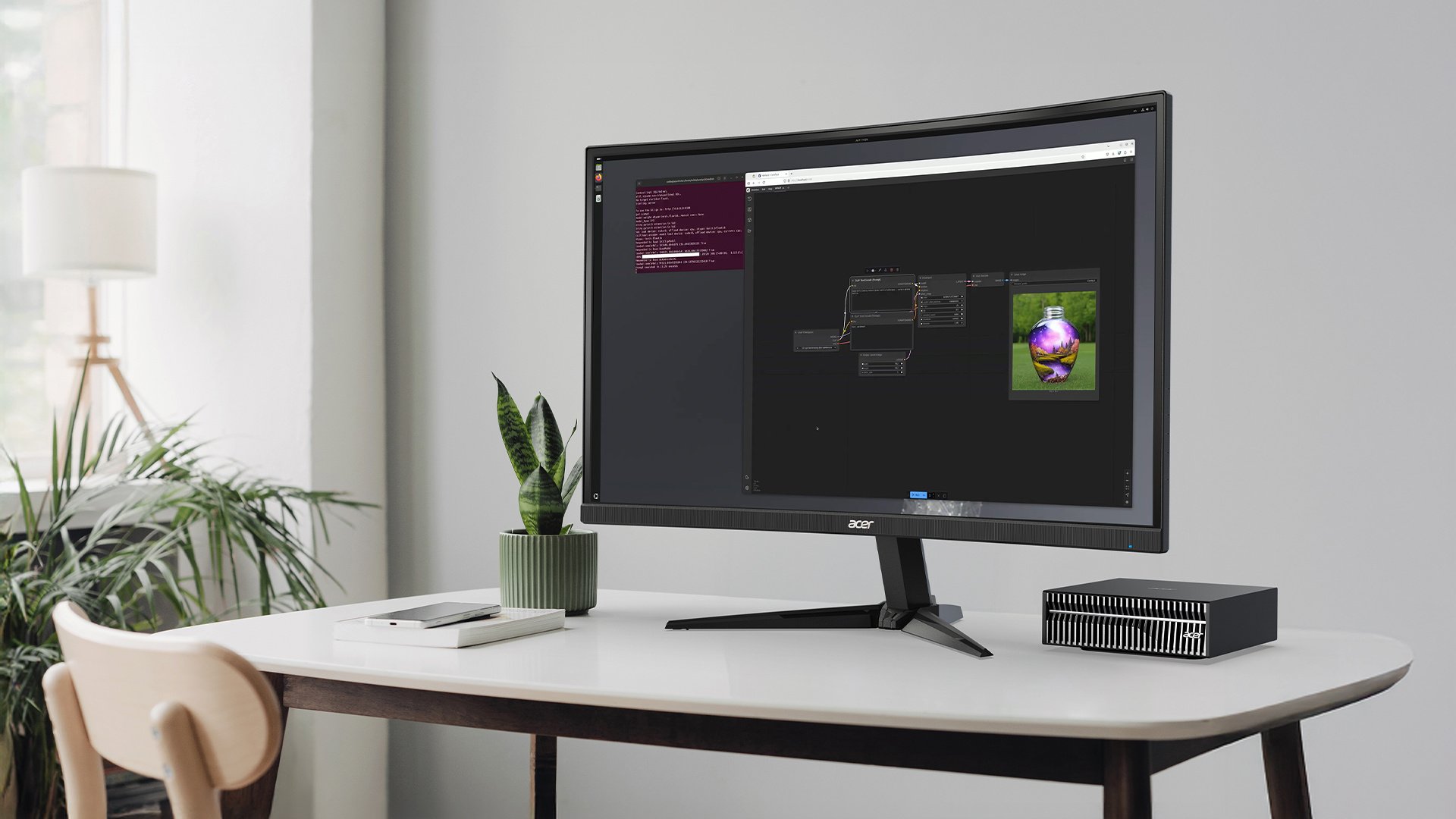

Artificial intelligence doesn’t have to live in the cloud or come with hyperscale costs. The Acer Veriton GN100 brings workstation-grade AI to your desktop. Powered by NVIDIA’s Grace Blackwell GB10 Superchip, it delivers petaFLOP-class performance that enables developers, universities, data scientists, and researchers to run large language models, AI agents, and computer vision workloads efficiently and securely - all on local hardware. This makes high-throughput, cost-efficient AI accessible without relying on expensive, continuously billed cloud infrastructure.

Misconceptions & Reality

Many still assume that serious AI development requires massive cloud bills or hyperscale servers. Common misconceptions include:

- AI is only possible in the cloud: Traditional thinking equates powerful AI with huge data centers, making developers believe local machines can’t handle modern AI workloads.

- Running large models locally is prohibitively expensive: High-end GPUs and specialized hardware are often seen as cost-prohibitive, reinforcing the idea that AI experimentation is reserved for large companies.

- Only full-scale training is valuable: Many assume that without training massive models from scratch, local AI workstations have limited value.

That is no longer the case. Running large language models, AI agents, or computer vision workloads locally can now be more cost-effective than paying per-token cloud fees, while giving teams more control over data, security, and workflows.
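The cost comparison above comes down to simple break-even arithmetic: a one-time hardware purchase plus a small running cost per token, set against a recurring per-token cloud fee. The sketch below is one possible way to express that, not a pricing model from Acer or NVIDIA. The function name `break_even_million_tokens` and every input value are hypothetical placeholders chosen for illustration; substitute your own hardware quote, electricity cost, and cloud rate.

```python
def break_even_million_tokens(hardware_cost: float,
                              cloud_price_per_million: float,
                              local_cost_per_million: float) -> float:
    """Millions of tokens after which local inference becomes cheaper.

    Solves  hardware_cost + local * n = cloud * n  for n, where n is
    measured in millions of tokens and all costs share one currency.
    """
    saving_per_million = cloud_price_per_million - local_cost_per_million
    if saving_per_million <= 0:
        # Local inference never catches up if it costs more per token.
        raise ValueError("local running cost must be below the cloud price")
    return hardware_cost / saving_per_million


if __name__ == "__main__":
    # Placeholder inputs for illustration only; these are NOT real prices.
    n = break_even_million_tokens(hardware_cost=4000.0,
                                  cloud_price_per_million=10.0,
                                  local_cost_per_million=2.0)
    print(f"Break-even after about {n:.0f} million tokens")
```

With these placeholder inputs the break-even point is 500 million tokens. The real figure depends entirely on actual prices and on how heavily the machine is used.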

AI workstations like the Acer Veriton GN100 are at the forefront of challenging these perceptions. Powered by the NVIDIA Grace Blackwell GB10 Superchip, the GN100 delivers up to 1 petaFLOP of FP4 AI performance, enabling high throughput, lower energy use, and reduced cost per action, all in a mini PC form factor. With 128 GB of unified memory and up to 4 TB of NVMe storage, it can handle models of up to 200 billion parameters, scaling to 405 billion parameters when two units are linked. This allows simultaneous use of large pre-trained or distilled LLMs in the 70B–120B range, including models such as a DeepSeek R1 distillation (70B parameters), gpt-oss (120B parameters), or comparable instruction-tuned and distilled LLMs optimized for local inference.

The Veriton GN100 is fully capable of operating as a standalone workstation or a network-connected AI resource, making it an efficient way to offload workloads that exceed standard office PC capabilities. What previously required data-center-scale hardware can now run locally, securely, and efficiently, empowering teams to experiment, innovate, and deploy AI on their own terms.

FP4 & Cost-Efficient Inference

As outlined above, much of the Veriton GN100’s advantage comes from how efficiently it can run modern AI models. A key part of this efficiency is numerical precision.

While FP16 has long been the standard for training and high-accuracy tasks, and FP8 offers a balanced mix of performance and precision for large-model inference, FP4 pushes efficiency even further. FP4 uses half the bits of FP8 and a quarter of FP16, allowing models to run faster, use less memory, and deliver significantly higher throughput - all while maintaining useful model accuracy for inference.
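The bit-width ratios above translate directly into memory for model weights. The sketch below estimates weight storage at each precision and checks it against the 128 GB of unified memory quoted earlier. It is a rough approximation under stated assumptions: it counts weights only and ignores the KV cache, activations, quantization scale factors, and memory used by the operating system, so real headroom is smaller. The helper names are hypothetical.

```python
BITS_PER_WEIGHT = {"FP16": 16, "FP8": 8, "FP4": 4}
UNIFIED_MEMORY_GB = 128  # unified memory figure quoted in the article


def weight_footprint_gb(params_billions: float, precision: str) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    # billions of parameters * bits per parameter / 8 bits per byte = GB
    return params_billions * BITS_PER_WEIGHT[precision] / 8


def fits_in_memory(params_billions: float, precision: str,
                   memory_gb: float = UNIFIED_MEMORY_GB) -> bool:
    """True if the weights alone fit in the given memory budget."""
    return weight_footprint_gb(params_billions, precision) <= memory_gb


if __name__ == "__main__":
    for params in (70, 120, 200):
        for precision in BITS_PER_WEIGHT:
            size = weight_footprint_gb(params, precision)
            verdict = "fits" if fits_in_memory(params, precision) else "too large"
            print(f"{params}B @ {precision}: {size:.0f} GB ({verdict})")
```

By this estimate, a 200-billion-parameter model needs about 100 GB for weights at FP4 but 400 GB at FP16, which is why low-precision formats matter for fitting large models into a single desktop machine.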

The Veriton GN100 is engineered to take full advantage of this. With support for FP4 and FP8 precision, it delivers high-throughput inference for large language models and autonomous agent workflows by reducing memory requirements and accelerating core computation. FP4’s ultra-low precision dramatically boosts token throughput and lowers power consumption, allowing the GN100 to deliver more inferences per second and more actions per watt than traditional FP16-based systems - a distinct advantage for teams running AI on local hardware.

Potential Use Cases

Now that high-throughput inference is possible locally, the Veriton GN100 enables practical AI applications in a variety of environments. Here are three:

Startups: Low Barrier of Entry for AI Applications

Startups in particular stand to benefit from the Veriton GN100, which offers a low-cost path to AI adoption by removing the need for ongoing cloud fees. Its high-performance local compute enables teams to prototype, test, and refine AI applications affordably - making it easier to innovate and scale without the financial barriers typically associated with cloud-based development.

Businesses: Local AI Agents

Organizations can deploy local AI agents to fulfill a variety of functions - from data analysis and predictive modeling to image, video, and audio processing. Powered by pre-trained models designed for tasks such as summarization, detection, or classification, these agents run entirely on-premises, keeping sensitive information secure without relying on external cloud services. Thanks to high-throughput FP4 inference, the Veriton GN100 delivers fast, predictable performance for continuous agent loops and multi-agent applications, turning advanced AI workloads into fixed, controllable costs.

Education: Research and Experimentation

Universities, labs, and schools can use the Veriton GN100 as a shared AI resource for researchers, scientists, and students as they explore pre-trained LLMs specialized for fields like biology, engineering, or data science, along with computer vision and generative AI models. This enables academic teams to experiment, prototype, and run advanced workloads securely on campus infrastructure, reducing reliance on cloud budgets and giving students hands-on experience with modern AI tools.

Developer Ecosystem & Resources

The value of the Veriton GN100 extends beyond hardware performance. NVIDIA’s developer ecosystem provides a comprehensive set of tools, playbooks, and workflow examples that help teams make the most of local AI deployment. Through resources available on NVIDIA’s DGX Spark website, developers can access optimized model runtimes, sample pipelines, and task-specific configurations designed to accelerate experimentation and development.

These resources make it easier to deploy custom agent workflows without starting from scratch. Whether used as a standalone workstation or a shared node in a larger lab environment, the Veriton GN100 benefits from NVIDIA’s continuously expanding software stack - enabling organizations to prototype, refine, and scale AI workloads directly from this mini form factor PC, clearing the way for eventual adoption of advanced servers or even cloud infrastructure.

Conclusion

Taken together, these capabilities position the Acer Veriton GN100 to bring high-performance AI to the desktop while making advanced workflows both accessible and cost-efficient. By running pre-trained LLMs, customized AI agents, and computer vision tasks locally, teams can achieve high throughput, reduced energy use, and lower operational costs - all without relying on cloud infrastructure.

With its compact form factor, scalable architecture, and support for FP4/FP8 inference, the Veriton GN100 demonstrates that AI is no longer confined to hyperscale servers. It is now an accessible, affordable path for businesses, developers, and creators to innovate and create. Local AI workstations empower organizations to experiment, build, and deploy intelligent solutions securely, efficiently, and on their own terms.
